Unnikrishnan Kalidas

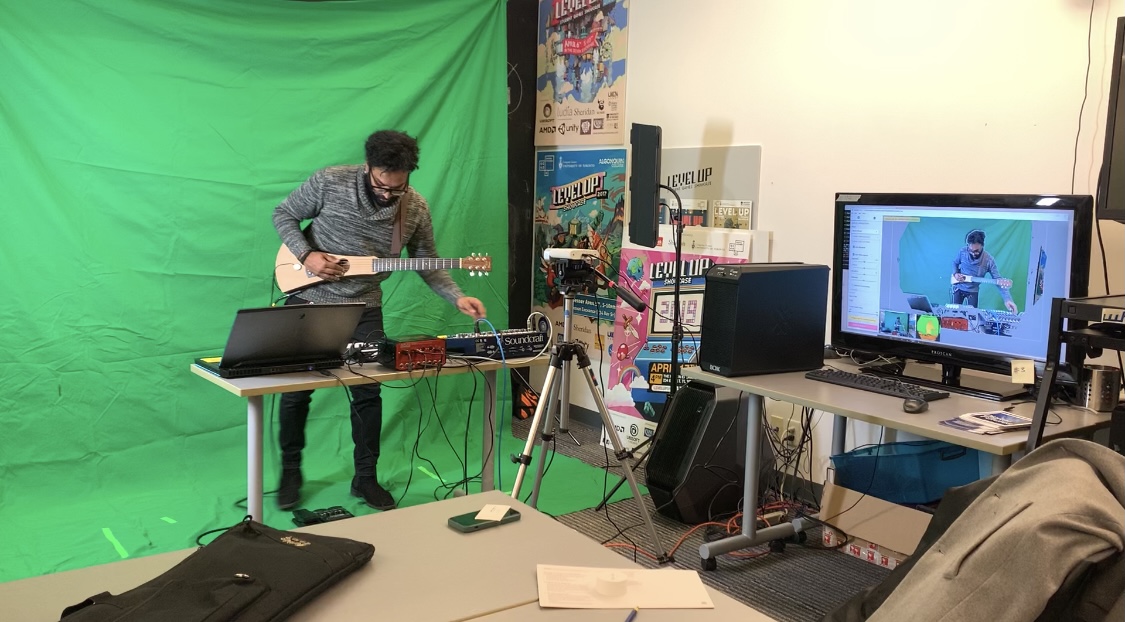

Seeing sound in a space is the central idea of my thesis, titled 'Music Visualization in a Performative setting'. In this experiment I attempt to create a visual representation of the sound I make by capturing the performance live with an Azure Kinect sensor (through the Azure Kinect SDK) and feeding the resulting point cloud data into TouchDesigner. Within TouchDesigner I transform the point cloud so that its particles respond to the live audio I am performing in real time. Combining these two data streams produces a soundscape that glitches wherever the two sources interfere.

Since the beginning of my graduate studies in Digital Futures, I have explored several ways of visualizing real-time performative music, and these explorations have now led me to simulate the performance itself in a volumetric format. The performed sound carries heavy reverb so that the audio analysis part of the TouchDesigner network receives a constant flow of input. The reverb in turn creates the reverb-scape that forms the space of both the performance and the audio.
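
The audio-reactive behaviour lives inside a TouchDesigner network that this page does not detail. As a rough illustration only, the Python/NumPy sketch below shows one way a point cloud could be made to respond to sound. It measures the RMS level of each audio buffer and pushes every point away from the cloud's centroid in proportion to that level, adding level-scaled random jitter as a stand-in for the glitch. The function names, the `gain` and `jitter` parameters, and the centroid-push mapping are all hypothetical assumptions, not the author's actual TouchDesigner setup.

```python
from typing import Optional

import numpy as np


def rms(samples: np.ndarray) -> float:
    """Root-mean-square level of one audio buffer (float samples in [-1, 1])."""
    return float(np.sqrt(np.mean(np.square(samples))))


def displace_points(points: np.ndarray, level: float, gain: float = 0.5,
                    jitter: float = 0.05,
                    rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Hypothetical audio-reactive mapping (an assumption, not the author's network).

    points: (N, 3) array of XYZ positions, e.g. one depth-camera frame.
    level:  audio level, e.g. from rms(); 0.0 leaves the cloud unchanged.
    Each point is pushed outward from the centroid by gain * level, then
    Gaussian jitter with standard deviation jitter * level is added.
    """
    rng = rng if rng is not None else np.random.default_rng()
    centroid = points.mean(axis=0)
    offsets = points - centroid
    norms = np.linalg.norm(offsets, axis=1, keepdims=True)
    # Unit direction away from the centroid; a point sitting exactly on it gets (0, 0, 0).
    directions = np.divide(offsets, norms, out=np.zeros_like(offsets), where=norms > 0)
    push = directions * (gain * level)
    noise = rng.normal(0.0, jitter * level, size=points.shape)
    return points + push + noise


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cloud = rng.uniform(-1.0, 1.0, size=(1000, 3))  # stand-in for a captured frame
    t = np.arange(1024) / 48000.0                   # one 1024-sample buffer at 48 kHz
    buffer = 0.5 * np.sin(2 * np.pi * 440.0 * t)    # stand-in for the live audio feed
    level = rms(buffer)
    moved = displace_points(cloud, level, rng=rng)
    print(f"level={level:.3f}, mean shift={np.linalg.norm(moved - cloud, axis=1).mean():.3f}")
```

Because a heavily reverberant signal rarely falls to true silence, the level in a mapping like this stays above zero between notes, so the cloud keeps moving; that is one plausible reading of why the reverb keeps data flowing into the analysis.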
